As noted in my post about Lustre Stats with Graphite and Logstash, we are huge fans of the ELK (Elasticsearch, Logstash, Kibana) stack. In that last example we didn't use the full ELK stack, but in this example we are going to use ELK for what it was meant for: log parsing and dashboarding.

We run a GridFTP server using the Globus.org packages. GridFTP, for those who don't know, is a higher-performance way to move data around. If you want to set up GridFTP, please use Globus Connect Server from globus.org. It's much easier than setting up the certificate system yourself, and it is quickly becoming the standard authentication and identity provider for national research systems.

GridFTP logs each transfer through your server. What I want to know is where, who, and how much is going through the server. I have been running this setup for a while now, but it could use some refining. You can find my full Logstash config as of this writing in a Gist.

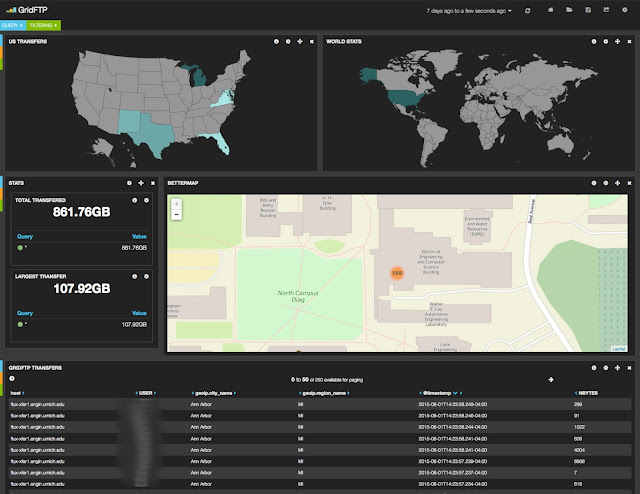

First the results:

Logstash has a number of filters that make this easier. We use the regular grok filter to match the transfer stats lines in the GridFTP log. You could modify this to capture the entire log in Elasticsearch for archival reasons. Then the kv (key-value) filter works wonders on the log's key=value entries, doing most of our work for us.
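To make the grok-then-kv step concrete, here is a minimal Python sketch of what those two filters do to a single line. It is not my Logstash config. The sample line is hypothetical, modeled on the globus-gridftp-server transfer log, and the `NBYTES=` check is just a crude stand-in for the grok match; the splitting mirrors the kv filter's default behaviour of splitting fields on spaces and keys from values on `=`.

```python
import re

# Hypothetical sample transfer line (assumed field set; yours may differ).
SAMPLE = (
    "DATE=20140801123456.500000 HOST=gridftp.example.edu "
    "PROG=globus-gridftp-server NL.EVNT=FTP_INFO "
    "START=20140801123446.500000 USER=alice FILE=/data/run1.tar "
    "BUFFER=0 BLOCK=262144 NBYTES=1048576000 VOLUME=/ STREAMS=4 "
    "STRIPES=1 DEST=[198.51.100.23] TYPE=RETR CODE=226"
)

# kv-style pair: a run of non-space characters, '=', then the value up to the next space.
KV_PAIR = re.compile(r"(\S+?)=(\S*)")


def parse_transfer(line):
    """Return the line's key=value fields as a dict, or None if it is not a transfer record.

    The 'NBYTES=' test is an assumed, crude stand-in for the grok match.
    """
    if "NBYTES=" not in line:
        return None
    return dict(KV_PAIR.findall(line))


print(parse_transfer(SAMPLE)["USER"], parse_transfer(SAMPLE)["DEST"])
```

All values come back as strings, which is also what kv gives you by default; numeric fields such as `NBYTES` need converting before you can sum them in Kibana.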

I have to use a few grok filters to isolate the IP of the remote server, but once that's done, Logstash has a built-in geoip filter that tags every transfer with geolocation information, which is what makes the maps possible. Oh, and in the dashboard those maps are interactive, so you can narrow down to transfers from just one country by clicking on it, or add a direct filter on the country code, zip code, etc. Really handy.
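The IP-isolation step amounts to pulling a bare address out of the destination field so geoip has something clean to look up. A minimal Python sketch, assuming the remote end appears as `DEST=[a.b.c.d]` (an assumption about the log format; striped or IPv6 transfers may look different and are not handled here). In Logstash, the resulting field is what you would point the geoip filter's `source` option at.

```python
import ipaddress
import re

# Assumed format: the remote endpoint is written as DEST=[a.b.c.d]. IPv4 only.
DEST_IP = re.compile(r"DEST=\[(\d{1,3}(?:\.\d{1,3}){3})\]")


def remote_ip(line):
    """Return the remote IPv4 address from a transfer line, or None if absent or invalid."""
    m = DEST_IP.search(line)
    if m is None:
        return None
    try:
        # Validate the dotted quad, e.g. reject an octet above 255.
        return str(ipaddress.ip_address(m.group(1)))
    except ValueError:
        return None


print(remote_ip("USER=alice DEST=[198.51.100.23] TYPE=RETR"))
```

Returning `None` rather than raising keeps a malformed line from stalling the pipeline; geoip simply has nothing to tag.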

Individual transfers are also tagged with the campus they come from when the remote end is a University address. Our subnets across the three campuses are known and published, so we use the cidr filter to add a tag for each campus, which lets us look at traffic from a specific campus. Again, really handy. I would love to get contributions that identify which traffic comes over Internet2 / MiLR versus the commodity internet.
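The campus tagging is a subnet-membership test: one cidr filter per campus, each checking the remote address against that campus's networks and adding a tag on a match. Here is a minimal Python sketch of the same logic using the standard `ipaddress` module. The campus names and subnets are hypothetical (RFC 5737 documentation ranges), so substitute your own published networks.

```python
import ipaddress

# Hypothetical campus names and subnets; replace with your institution's networks.
CAMPUS_NETWORKS = {
    "campus-a": [ipaddress.ip_network("192.0.2.0/24")],
    "campus-b": [ipaddress.ip_network("198.51.100.0/24")],
    "campus-c": [
        ipaddress.ip_network("203.0.113.0/25"),
        ipaddress.ip_network("203.0.113.128/25"),
    ],
}


def campus_tags(ip):
    """Return every campus tag whose networks contain ip (like one cidr filter per campus)."""
    addr = ipaddress.ip_address(ip)
    return [
        campus
        for campus, nets in CAMPUS_NETWORKS.items()
        if any(addr in net for net in nets)
    ]


print(campus_tags("198.51.100.23"), campus_tags("8.8.8.8"))
```

In Logstash the equivalent is a cidr block per campus with `address` set to the remote-IP field, `network` set to that campus's subnets, and `add_tag` naming the campus. An off-campus address gets no tag, which is also a handy way to separate internal from external traffic.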

A warning: the bandwidth calculation is commented out for a reason. It works, but not all GridFTP log entries contain everything needed for the calculation, and those incomplete entries make the ruby filter throw errors and Logstash hang.
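One way around the incomplete-entry problem is to validate every input before doing the arithmetic and skip the record when anything is missing. The sketch below is plain Python, not the ruby filter from my config, and it assumes bandwidth is `NBYTES` divided by the time between `START` and `DATE`, with both timestamps in a `YYYYmmddHHMMSS.ffffff` form (an assumption about the log format). The same guard-first structure should carry over to the ruby filter's code.

```python
from datetime import datetime

# Assumed timestamp format of the DATE/START fields, e.g. 20140801123456.500000.
TS_FORMAT = "%Y%m%d%H%M%S.%f"


def bandwidth_mbps(fields):
    """Megabits per second for one transfer, or None when the record can't support it.

    fields: dict of the record's key=value pairs, all values as strings.
    """
    try:
        start = datetime.strptime(fields["START"], TS_FORMAT)
        end = datetime.strptime(fields["DATE"], TS_FORMAT)
        nbytes = int(fields["NBYTES"])
    except (KeyError, ValueError):
        return None  # missing or malformed field: skip instead of crashing
    seconds = (end - start).total_seconds()
    if seconds <= 0:
        return None  # zero or negative duration would divide by zero or be meaningless
    return nbytes * 8 / seconds / 1e6


rec = {"START": "20140801123446.500000", "DATE": "20140801123456.500000",
       "NBYTES": "1250000000"}
print(bandwidth_mbps(rec))  # 10 Gbit over 10 s
```

Returning `None` means the event is still indexed, just without a bandwidth field, so incomplete transfers still show up in the counts and maps.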

So it was very easy to use Logstash to make sense of the GridFTP log files, and the rest of the ELK stack let us quickly build dashboards for our file transfers.

I was inspired to write this after a presentation at XSEDE14, where the classic system of scripts plus copying log files around was used, left me thinking there must be an easier way to handle GridFTP logs. The solution here is near real-time, and we have found it to be very durable.
